Reviving a Dead Audio Format: The Return of ZZM

Long-time readers will know that my first video game love was the text-mode video game slash creation studio ZZT. One feature of this game is the ability to play simple music through the PC speaker, and back in the day, I remember that the format “ZZM” existed, so you could enjoy the square wave tunes outside of the games. But imagine my surprise in 2025 to find that, while the Museum of ZZT does have a ZZM Audio section, it recommends that nobody use the format anymore, because nobody’s made a player that doesn’t require MS-DOS. Let’s fix that by making a player with way higher system requirements, using everyone’s favorite coding environment: JavaScript.

“Hey, I don’t care about all this garbage!”

Here’s the code, go wild. For everyone else, here is the blog post.

ZZT and the PC Speaker

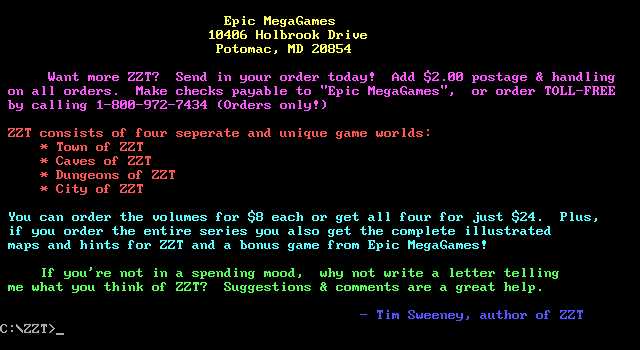

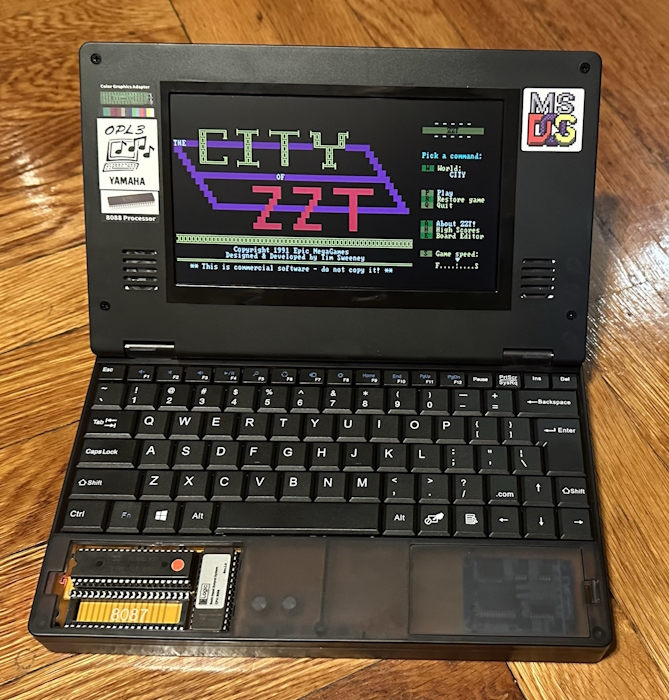

Despite being a game from 1990, Epic MegaGames’ (yes, it is that Epic, but it’s less Mega today) ZZT is really designed around what were the bare-minimum PC specs: 640 KiB of RAM, and it even works on an Intel 8088– my Book 8088 is used to play basically nothing else.

That includes the audio, which uses nothing more than the PC Speaker. That makes it a little hard to record; pretty much all the authentic real hardware (tm) I have that can run ZZT pumps the PC speaker directly into a beeper, including the Book 8088.

So what kind of music capabilities did ZZT have? Since the goal of ZZT was also to allow players to create their own game world, it’s documented in the unofficial manual (look up the #play command), but a bit loosely. For example, here’s all it says on notes:

    Notes and rests:

    X     Rest
    A-G   Piano notes (can be followed by:
            #  sharp
            !  flat

There are + and - to move up and down an octave, but what’s the base octave? What frequency are the notes? Just open ZZT and mess around, it’s enough for a game maker to make a little tune for the title screen.

But ZZM!

ZZM was not made by Epic MegaGames. I believe it originated with ZZT community member Jacob Hammond around the turn of the millennium. And long-time ZZT enthusiasts will know that by that point, the source code for ZZT had been lost– even if Tim Sweeney himself had wanted to, he couldn’t provide it. Therefore, Hammond had to manually reimplement ZZT audio from scratch. Oof.

So what is a ZZM file?

The core of the ZZM environment is the “ZZM Music Ripper”. Essentially, this is a simple program that goes through a .ZZT file looking for all objects, and then digs through their code looking for #play commands. These are shuffled together into a file.

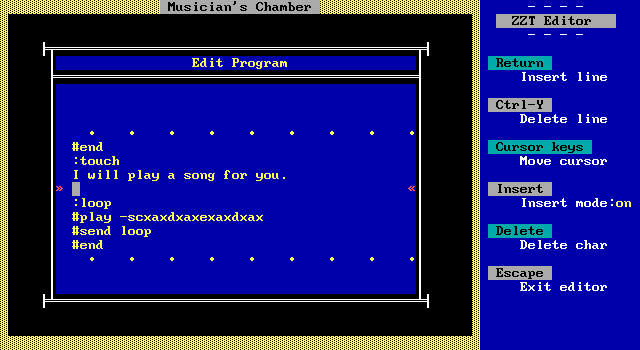

Don’t believe me? Let’s do it ourselves. I wrote up the loop that forms the first few notes of Right, the Area 4 theme from Aspect Legend. Of course the actual track used Game Boy style audio and has more channels.

And here’s what it sounds like. This is DOSBox audio output, mind you. Sped up like this, you can really see how Spaceless, the Area 1 theme of Space Ava, started from a similar place.

To rip the track, I used ZZT Music Player v2.0, since it’s the only version that had support in DOSBox.

    ; ZZT Music File v1.0
    ; $TITLE Aspect Legend v0.00
    ; $GENDATE 01-16-2025
    ; $GENTIME 18:33:23
    ; $SONGS BEGIN
    ; $SONG 1
    -scxaxdxaxexaxdxax
    ; $SONG ENDS
    ; $SONGS END
    ; $EOF

We will note a few things right away.

- This is a plain text file. Special commands seem to be preceded with ;.

- Song data itself is on bare lines, and matches the #play format.

- The loop is gone. That’s because there’s no support for loops in ZZM.

- There are no track titles.

Later versions of ZZM did feature track titles. In fact, the included file ZZTMUS1.ZZM has them; this version of ZZT Music Player just doesn’t support them. Here’s how that file looks, somewhat trimmed down.

    ZZTMPLAYer file, version 2.0.
    ; $TITLE ZZT~Music~Collection~1
    ; $SONGS BEGIN
    ; $SONG TITLE 1 Blue~Moon~CD~Player~music
    ; $SONG 1
    Q-EF#GF#EDHEQ444I44
    IE+E-E+F#-E+G-E+F#-E+E-E+D-E+E-EB
    ...

Notice that it has a different version line as well, and titles use ~ in place of spaces. But other files I’ve shown don’t follow this rule; it’s a bit rough and dirty. Titles also seem to be allowed anywhere in the file. (My guess is that this is because they could be added to a ripped file after the fact.)

So I ended up writing a very simple parser. One of the most annoying parts proved to just be stripping out \r; these files all use the classic MS-DOS \r\n line endings, and at least on my Mac the \r characters are still there when the file is loaded into JS.
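A parser along these lines can be sketched in a few dozen lines. This is only a minimal illustration, not the parser from the linked code: the function name parseZzm and the shape of the object it returns are made up for this example, and it assumes that `; $SONG n` opens a song, `; $SONG TITLE n text` names it, `~` stands in for a space in titles, and every bare line after `; $SONG n` is #play data.

```javascript
// Hypothetical sketch of a ZZM parser; names and output shape are assumptions.
function parseZzm(text) {
  const result = { title: null, songs: new Map() };
  let current = null; // song currently receiving data lines

  // Return the song record for a number, creating it on first use.
  const songFor = (num) => {
    if (!result.songs.has(num)) {
      result.songs.set(num, { title: null, data: [] });
    }
    return result.songs.get(num);
  };
  // Titles may use ~ in place of spaces.
  const untilde = (s) => s.replace(/~/g, " ").trim();

  // Splitting on \r?\n removes the MS-DOS carriage returns up front.
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "") continue;

    if (!line.startsWith(";")) {
      // Bare line: #play data for the current song. A bare line before any
      // song (such as a version banner) is ignored.
      if (current) current.data.push(line);
      continue;
    }

    const command = line.slice(1).trim();
    let m;
    if ((m = command.match(/^\$SONG TITLE (\d+) (.*)$/))) {
      songFor(Number(m[1])).title = untilde(m[2]);
    } else if ((m = command.match(/^\$SONG (\d+)$/))) {
      current = songFor(Number(m[1]));
    } else if (/^\$SONG ENDS$/.test(command)) {
      current = null;
    } else if ((m = command.match(/^\$TITLE (.*)$/))) {
      result.title = untilde(m[1]);
    }
    // Everything else (dates, $SONGS BEGIN/END, $EOF, comments) is skipped.
  }
  return result;
}
```

Because titles are stored by song number, it doesn't matter whether a `$SONG TITLE` line shows up before or after the song it describes.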

Cool, cool, but what about the music?

The thing is, all I’ve got is the input to a #play command. Now, in theory, since one can use Zeta to run ZZT in the browser, you could just take those outputs, quickly generate a ZZT file on the fly that plays that on the title screen, and then run Zeta in the background. But that felt pretty silly. Instead, let’s look at how ZZT sound works.

The PC Speaker

The PC Speaker is not the same as the beeper on the Apple ][. On that system, you had to manually pulse the speaker to move its cone in and out, and the frequency you pulse the special address at is the frequency your sound plays at.

The PC Speaker is the next level up of sophistication. It’s not a dedicated sound chip, but uses a channel on the PC’s programmable interval timer. That channel is configured to output a square wave at some frequency; the PC can control the timer to control the frequency. It’s just one channel, and it’s always a square wave. It was possible to be clever and do more complex waveforms, but ZZT doesn’t do that.
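To make "control the timer to control the frequency" a bit more concrete: the timer counts down from a 16-bit divisor at a fixed input clock of about 1.193182MHz, so the output frequency is that clock divided by the divisor. Here is a rough sketch of the arithmetic. The helper name pitDivisorFor is invented for this example, and rounding to the nearest divisor is a choice made here, not necessarily what Turbo Pascal's Sound does internally.

```javascript
// Input clock of the programmable interval timer on IBM PC compatibles, in Hz.
const PIT_CLOCK_HZ = 1193182;

// Hypothetical helper: pick a divisor for a requested frequency and report
// the frequency the speaker would actually produce with it.
function pitDivisorFor(freqHz) {
  // Clamped to 1..65535 for simplicity in this sketch.
  const rounded = Math.round(PIT_CLOCK_HZ / freqHz);
  const divisor = Math.min(65535, Math.max(1, rounded));
  return { divisor, actualHz: PIT_CLOCK_HZ / divisor };
}

const a440 = pitDivisorFor(440);
console.log(a440.divisor, a440.actualHz);
```

Since the divisor is an integer, most requested frequencies come out very slightly off, but at musical pitches the error is far too small to hear.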

Here’s the Pascal documentation for the function Sound, which ZZT uses to play the music. It takes a single parameter, the frequency; you call the related function NoSound to stop it.

Now, how do I know what function ZZT uses, when the source code was gone by the 21st century? Because enthusiasts brought it back. Specifically, Asie’s Reconstruction of ZZT project has painstakingly recreated the original source code, or at least a source code that compiles byte-for-byte to an identical executable. Check out the blog post, this is a real accomplishment of reverse-engineering.

Recreating the ZZT sound engine

Unfortunately, the W3C has not yet seen fit to add a WebPcSpeaker API to JavaScript. This is a major oversight, and I recommend all Nicole Express readers who are in charge of standardization committees get that rectified as soon as possible. So we have to use the Web Audio API. Like pretty much anything designed for audio in the modern era, the Web Audio API is not based around square waves, it’s based around samples.

Of course, the square wave is probably the easiest wave to convert to samples. Here’s how I did it.

    function generateSquareWave(freq, sampleRate, duration) {
      const output = new Float32Array(Math.floor(duration * sampleRate));
      const period = (1 / freq) * sampleRate;
      for (let i = 0; i < output.length; i++) {
        const point = i % period;
        if (point > (period / 2)) {
          output[i] = -1;
        } else {
          output[i] = 1;
        }
      }
      return output;
    }

There are probably even more clever and efficient ways to do it, but a square wave really is just like that. Now, you could argue that a real square wave output by the PC Speaker has slightly different characteristics, since square waves don’t exist in the real world. To which I’d say that the imperfections will still happen in your headphones or sound system, so don’t worry about it. But I don’t have “golden ears”.
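Getting a Float32Array like that out of the speakers takes only a few Web Audio calls. The following is a minimal sketch, not the playback code from the actual player: playSamples is a made-up name, and it assumes mono output and that the caller supplies an AudioContext.

```javascript
// Hypothetical helper: play mono samples through a Web Audio AudioContext.
function playSamples(audioCtx, samples, sampleRate) {
  // One channel, as long as the sample array, at the rate it was generated for.
  const buffer = audioCtx.createBuffer(1, samples.length, sampleRate);
  buffer.copyToChannel(samples, 0);

  // A buffer source plays its buffer once and is then finished.
  const source = audioCtx.createBufferSource();
  source.buffer = buffer;
  source.connect(audioCtx.destination);
  source.start();
  return source;
}
```

In a browser, usage would look something like `const ctx = new AudioContext(); playSamples(ctx, generateSquareWave(440, ctx.sampleRate, 0.5), ctx.sampleRate);`. Browsers generally only allow audio to start after a user gesture such as a click, and a full-scale square wave is loud, so putting a GainNode between the source and the destination is worth considering.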

It Hertz

Of course, generating a square wave is the easy part. That first parameter, freq, now that’s the hard part. Like many sound engines, ZZT’s relies on an internal frequency table. Rather than being hardcoded, this one is generated at startup.

    procedure SoundInitFreqTable;
      var
        octave, note: integer;
        freqC1, noteStep, noteBase, ln2: real;
      begin
        freqC1 := 32.0;
        ln2 := Ln(2.0);
        noteStep := Exp(ln2 / 12.0);
        for octave := 1 to 15 do begin
          noteBase := Exp(octave * ln2) * freqC1;
          for note := 0 to 11 do begin
            SoundFreqTable[octave * 16 + note] := Trunc(noteBase);
            noteBase := noteBase * noteStep;
          end;
        end;
      end;

Notice that it starts by declaring 32Hz to be the frequency of the note C1, but the actual first octave value is e^(ln(2)) * fC1. That is to say, the lowest C in ZZT is 64Hz. (You can also note that the array SoundFreqTable has a lot of empty spots that aren’t filled. It’s actually sized to 256 entries, if I read my Pascal correctly)

Modern tuning is based around the note A. Specifically, most western instruments are tuned to 440Hz as the A above middle C, known as “A440”. Follow the ZZT frequency table, and we get that A at 430Hz. Is that a big deal? Baroque instruments used around 415Hz, and the base frequency rose throughout the 20th century, so variation isn’t unheard of– but it will definitely make ZZT sound just a little out of tune relative to most music. Here’s my translation of that to JavaScript.

    function initSoundFreqTable() {
      let soundFreqTable = [];
      const freqC1 = 32;
      const ln2 = Math.LN2;
      const noteStep = Math.exp(ln2 / 12);
      for (let octave = 1; octave <= 15; octave++) {
        let noteBase = Math.exp(octave*ln2) * freqC1;
        for (let note = 0; note <= 11; note++) {
          soundFreqTable[octave * 16 + note] = Math.floor(noteBase);
          noteBase *= noteStep;
        }
      }
      return soundFreqTable;
    }

Notice I kept the gaps in the frequency table. Not how I’d implement it from scratch, but felt it was safer to just follow the ZZT code. That included using 1 to index my arrays occasionally and having some weird gaps in the array. I probably didn’t need to declare a variable just for Math.LN2, though; it was already constant.
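As a quick sanity check on the 430Hz figure from earlier, the table entry can be recomputed directly and compared against A440 in cents. This is just a worked calculation: zztNoteFrequency and centsBetween are names invented for it, and it uses a closed-form power of two instead of the repeated multiplication in the Pascal, which could differ by rounding for entries that land very close to a whole number.

```javascript
// Frequency ZZT's table would hold for a note, following the Pascal above:
// 32 Hz * 2^octave, raised by `note` equal-tempered semitones, then truncated.
function zztNoteFrequency(octave, note) {
  return Math.trunc(32 * Math.pow(2, octave) * Math.pow(2, note / 12));
}

// Distance between two frequencies in cents (100 cents = one semitone).
function centsBetween(freq, reference) {
  return 1200 * Math.log2(freq / reference);
}

// Octave 3 is the one whose C comes out at 256 Hz; A is 9 semitones above C.
const zztA = zztNoteFrequency(3, 9);
console.log(zztA, centsBetween(zztA, 440).toFixed(1));
```

That works out to 430Hz, roughly 40 cents flat of A440, or a bit less than a quarter tone. Most of the gap comes from starting at a round 256Hz C rather than from the truncation.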

Blazing Pascal

The big function here, though, is the one that decodes the string you pass to the #play command. That function is SoundParse; I’ve left a link because this is a big one to reproduce in full. This actually creates an intermediate format that is later used to actually play the sound. Why?

- Notes can vary from one to two characters (e.g. C and B#). So the intermediate version has them always take one byte.

- When writing a track, there is a lot of state that is kept along. Specifically, if you write a B, the program needs to know the current octave and the current note duration in order to actually figure out what note to play. In the intermediate representation, the full note and duration are decoded for each point.
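The two points above can be illustrated with a small decoder that walks a #play string while tracking octave and duration, and emits one fully resolved pair per note. To be clear, this is a hypothetical sketch and not ZZT's SoundParse or the player's own code: decodePlayString is an invented name, the starting octave of 3, the duration values for S through W, the semitone offsets beyond C and D, and the code 0 for rests are all assumptions, and drums, triplets, dots and octave limits are left out.

```javascript
// Semitone offsets within an octave (standard values; assumed for E through B).
const SEMITONES = { C: 0, D: 2, E: 4, F: 5, G: 7, A: 9, B: 11 };
// Duration letters; T = 1 matches the Pascal, the rest are assumed to double.
const DURATIONS = { T: 1, S: 2, I: 4, Q: 8, H: 16, W: 32 };

// Hypothetical decoder: returns [{ code, duration }, ...] where code packs
// octave * 16 + tone, the same index scheme the frequency table uses.
function decodePlayString(input) {
  const events = [];
  let octave = 3;   // assumed default octave
  let duration = 1; // assumed default duration
  const text = input.toUpperCase();
  let i = 0;
  while (i < text.length) {
    const ch = text[i++];
    if (ch in DURATIONS) {
      duration = DURATIONS[ch];
    } else if (ch === "+") {
      octave++;
    } else if (ch === "-") {
      octave--;
    } else if (ch === "X") {
      events.push({ code: 0, duration }); // rest (code 0 is an assumption)
    } else if (ch >= "A" && ch <= "G") {
      let tone = SEMITONES[ch];
      // An optional second character sharpens or flattens the note.
      if (text[i] === "#") { tone++; i++; }
      else if (text[i] === "!") { tone--; i++; }
      events.push({ code: octave * 16 + tone, duration });
    }
    // Anything else is skipped in this sketch.
  }
  return events;
}
```

Once every event carries its own octave, tone and duration, whatever consumes the list no longer needs to know anything about the text format or its running state.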

In my version of this function, I decided that rather than create an intermediate format, I would have the output be a full Float32Array of audio samples that could be passed directly into the Web Audio API.

The SoundParse function surprised me for another reason. I’m not very familiar with the Pascal language; but the language is quite sophisticated in some ways. The whole function is based around a case statement. This isn’t the same as a C switch. It also features pattern matching; Tim Sweeney also used an AdvanceInput function that acts as a closure.

    case UpCase(input[1]) of
      'T': begin
        noteDuration := 1;
        AdvanceInput;
      end;
      { some additional code removed }
      'A'..'G': begin
        case UpCase(input[1]) of
          'C': begin
            noteTone := 0;
            AdvanceInput;
          end;
          'D': begin
            noteTone := 2;
            AdvanceInput;
          end;
          { etc for all other notes }
        end;
        case UpCase(input[1]) of
          '!': begin
            noteTone := noteTone - 1;
            AdvanceInput;
          end;
          '#': begin
            noteTone := noteTone + 1;
            AdvanceInput;
          end;
        end;
        output := output + Chr(noteOctave * $10 + noteTone) + Chr(noteDuration);
      end;

Notice that the case can have a clause like 'A'..'G', where all letters between A and G are matched. JavaScript doesn’t have this capability, as far as I know (various proposals have made it to the ECMAScript committee), so for this function I had to diverge quite a bit more from the original. Here’s my take on this code.

Note duration

One last thing. You might wonder how I determined the note duration; after all, in SoundParse the noteDuration is just a count of thirty-second notes (T, the thirty-second note, sets it to 1). But this turns out to be pretty simple. At the very end of SOUNDS.PAS, we see this:

SetIntVec($1C, SoundNewVector);

The 0x1C interrupt is the PC’s timer tick interrupt. It’s driven by the very same programmable timer chip that feeds the PC speaker, just on a different channel, and in most cases, including this one, it fires about once every 55ms. And using that as the length of one noteDuration unit worked fine. Let’s take a listen. Here’s that short track from earlier.

Drums cause a Code Red

Here’s a longer audio sample, the title screen music from Alexis Janson’s Code Red. Note that this was recorded from DOSBox, which is known to not be perfect at running ZZT, but it sounds good to my ears.

And here’s my take on it. It’s a little louder!

You might hear something a little weird. A little squelchy… or as ZZT calls it, the drums. The manual doesn’t give us much detail.

    Rythmic Sound FX:

    0   Tick
    1   Tweet
    2   Cowbell
    4   High Snare
    5   High woodblock
    6   Low snare
    7   Low tom
    8   Low woodblock
    9   Bass drum

Notice that “3” is skipped; that’s because 3 is used for triplet notes. But beyond that, we don’t get any description about how these work. It might’ve been fun to just use some samples of real-world drums, but that wouldn’t give the Real ZZT Sound™.

So I just established that all the Pascal API used by ZZT can do is play particular frequencies. So how did ZZT implement drums?

    procedure SoundPlayDrum(var drum: TDrumData);
      var
        i: integer;
      begin
        for i := 1 to drum.Len do begin
          Sound(drum.Data[i]);
          Delay(1);
        end;
        NoSound;
      end;

The drums are just a series of single frequencies played in quick succession, each for 1ms. The TDrumData are generated by another table right here, which just needed a little massaging to convert to JavaScript. I started by using ChatGPT but alas, it really got confused by the 1-indexed arrays.

One thing you’ll note is that it uses the Random function to generate certain drums; specifically, the high snare (just like the Panasonic rhythm machine!) and the two woodblocks. I just used Math.random, but in theory, it seems like the particular characteristics of Turbo Pascal’s random number generator will be reflected in the drum sounds. Maybe that’s why my drums sound just a little squelchier.
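Turning a drum into samples is then mostly a matter of chaining very short square-wave segments. Below is a rough sketch of the idea rather than the player's real drum code: renderDrum is a made-up name, the frequency list in the usage line is invented rather than taken from ZZT's drum table, and carrying the wave's phase across frequency changes (instead of restarting the wave every millisecond) is a choice made for this example.

```javascript
// Hypothetical sketch: turn a list of drum frequencies into samples, holding
// each frequency for stepMs milliseconds (1 ms in ZZT's SoundPlayDrum).
function renderDrum(freqs, sampleRate, stepMs = 1) {
  const samplesPerStep = Math.round((sampleRate * stepMs) / 1000);
  const output = new Float32Array(freqs.length * samplesPerStep);
  let phase = 0; // position within the current cycle, from 0 up to 1
  for (let step = 0; step < freqs.length; step++) {
    // How far through a cycle one sample advances at this frequency.
    const phaseStep = freqs[step] / sampleRate;
    for (let n = 0; n < samplesPerStep; n++) {
      // First half of the cycle is high, second half is low.
      output[step * samplesPerStep + n] = phase < 0.5 ? 1 : -1;
      phase = (phase + phaseStep) % 1;
    }
  }
  return output;
}

// Made-up frequencies, purely for illustration.
const exampleDrum = renderDrum([1100, 1200, 1300], 48000);
console.log(exampleDrum.length);
```

At 48kHz each 1ms step is only 48 samples long, so a frequency around 1kHz gets about one full cycle before the next frequency takes over.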

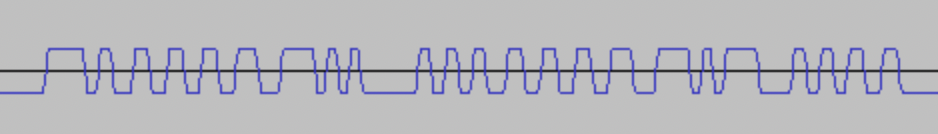

Here’s an example of what a trace of a drum looks like from my ZZT recording. Notice that the quick changes of frequency mean that you really only see one or two peaks of a square wave before the next one starts. (You can also see that the PC Speaker puts rests at the low amplitude, rather than returning to 0)

To see what a difference having the source code makes, here’s how Jacob Hammond’s ZZT Music Player v2.0 plays back the same track. Again this is from DOSBox, which is known to have problems with ZZT Music Player in particular, and in any case I don’t want to be harsh on Hammond here; again, he had to reverse-engineer all of this himself without access to modern tools. This is just for comparison’s sake.